Dataset sampling

import pandas as pd

import seaborn as sns

import numpy as np

import matplotlib.pyplot as plt

custom_style = {"grid.color": "black", "grid.linestyle": ":", "grid.linewidth": 0.3, "axes.edgecolor": "black", "ytick.left": True, "xtick.bottom": True}

sns.set_context("notebook")

sns.set_theme(style="whitegrid", rc=custom_style)

# Read the dataset

df = pd.read_csv("../data/global-data-on-sustainable-energy.csv")

Statistical Relationship

1. Feature Correlation

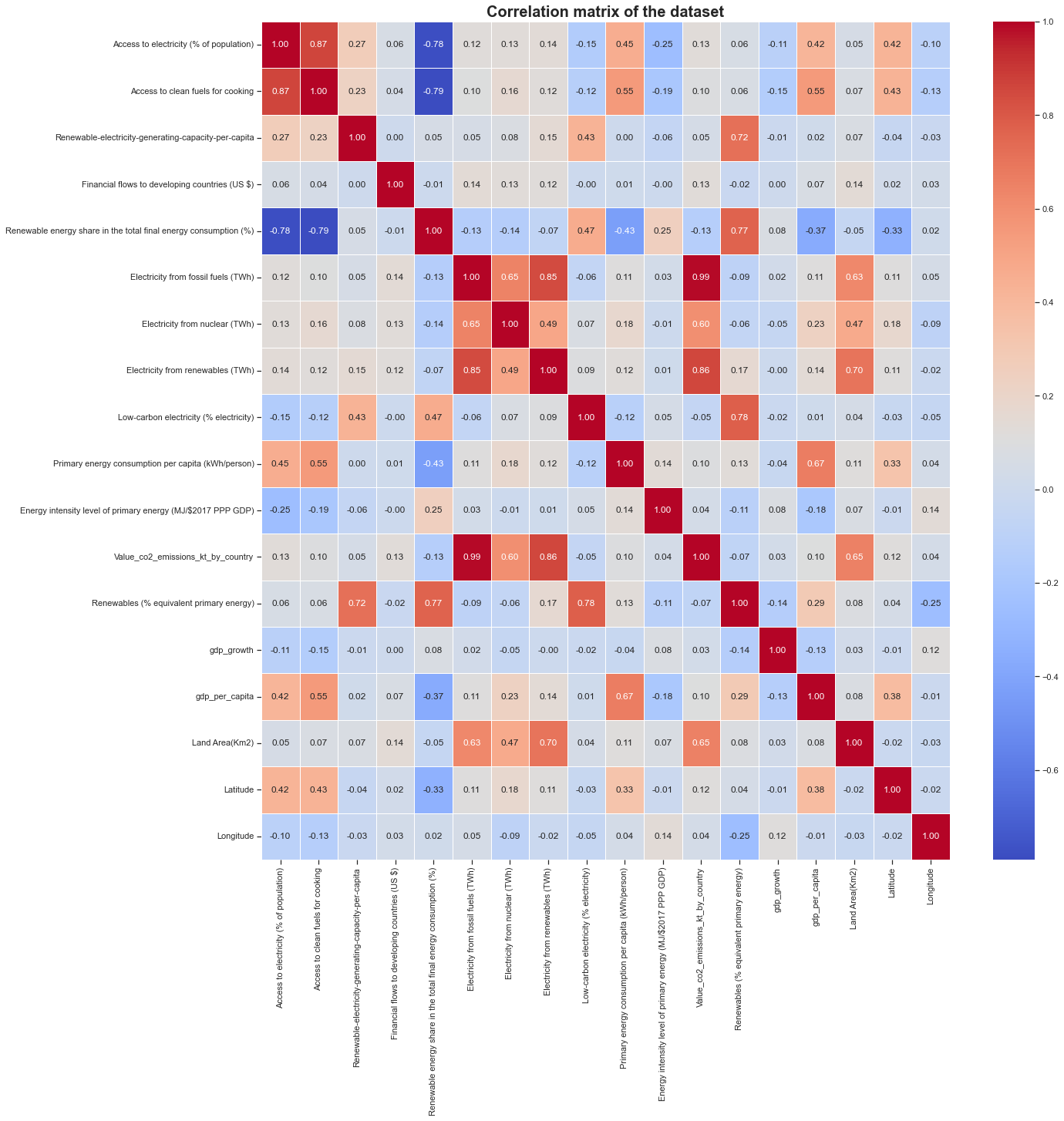

Correlation coefficients are indicators of the strength of the linear relationship between two different variables. A bigger circle means a higher correlation. The color of the circle indicates the sign of the correlation. A negative correlation (indicated by a blue color) means that the two variables move in opposite directions (when a variable is increasing, the other is decreasing). A positive correlation (indicated by a red color) means that the two variables move in the same direction (when a variable is increasing the other is also increasing).

plt.figure(figsize=(20,20))

corr_matrix = df.iloc[:, 2:21].corr()

sns.heatmap(corr_matrix, annot=True, fmt=".2f", cmap="coolwarm", linewidths=0.5)

plt.title("Correlation matrix of the dataset", fontsize=20, fontweight="bold")

plt.savefig("../img/correlation_matrix.png", dpi=200, bbox_inches="tight")

We can clearly see that some features are highly corrolated to each other but other are not. For instance the Financial flow to developing countries (US$) has only poor correlation with all other features. It would be hard to predict this feature based on the other ones. This is the same for the gdp_growth, Longitude, Energy intensity level of primary energy features. It could have been interesting to work with these features but we will not use them for the project.

An interesting thing to note is that the Latitude feature is quite positively well corrolated with the Access to electricity and Access to clean fuels for cooking features. This means that the more you go to the north, the more you have access to electricity and clean fuels for cooking. This is not surprising since the north is more developed than the south (look also for the gdp_per_capita correlation score). This is also the case for the Primary energy consumption par capita feature. Northern countries consume more energy than southern ones. They tend to have a higher impact on climate change than southern countries.

Now, we can set an objective for the project. We would like to predict:

- The CO2 emissions

- Primary energy consumption per capita

These are mainly regression problems.

Predicting the percentage of Renewables energy as primary energy could have been also interesting to do but the response feature is so poorly filled that we can’t use it as a response feature (cf the dataset description section).

2. Feature selection

Selection for the CO2 emissions prediction

Looking at the heatmap, we can clearly see that a linear relationship exists between some factors and the response (Value_co2_emissions_kt_by_country). Taking a threshold of 0.5 for the correlation score will make us takinng important feature to compute a regression after that.

co2_cols_to_keep = [column for column in corr_matrix.columns if abs(corr_matrix.loc['Value_co2_emissions_kt_by_country', column]) > 0.5]

co2_cols_to_keep

['Electricity from fossil fuels (TWh)',

'Electricity from nuclear (TWh)',

'Electricity from renewables (TWh)',

'Value_co2_emissions_kt_by_country',

'Land Area(Km2)']

df_co2 = df[['Entity', 'Year'] + co2_cols_to_keep]

df_co2.to_csv("../data/co2/co2_dataset.csv", index=False)

df_co2

| Entity | Year | Electricity from fossil fuels (TWh) | Electricity from nuclear (TWh) | Electricity from renewables (TWh) | Value_co2_emissions_kt_by_country | Land Area(Km2) | |

|---|---|---|---|---|---|---|---|

| 0 | Afghanistan | 2000 | 0.16 | 0.0 | 0.31 | 760.000000 | 652230.0 |

| 1 | Afghanistan | 2001 | 0.09 | 0.0 | 0.50 | 730.000000 | 652230.0 |

| 2 | Afghanistan | 2002 | 0.13 | 0.0 | 0.56 | 1029.999971 | 652230.0 |

| 3 | Afghanistan | 2003 | 0.31 | 0.0 | 0.63 | 1220.000029 | 652230.0 |

| 4 | Afghanistan | 2004 | 0.33 | 0.0 | 0.56 | 1029.999971 | 652230.0 |

| ... | ... | ... | ... | ... | ... | ... | ... |

| 3644 | Zimbabwe | 2016 | 3.50 | 0.0 | 3.32 | 11020.000460 | 390757.0 |

| 3645 | Zimbabwe | 2017 | 3.05 | 0.0 | 4.30 | 10340.000150 | 390757.0 |

| 3646 | Zimbabwe | 2018 | 3.73 | 0.0 | 5.46 | 12380.000110 | 390757.0 |

| 3647 | Zimbabwe | 2019 | 3.66 | 0.0 | 4.58 | 11760.000230 | 390757.0 |

| 3648 | Zimbabwe | 2020 | 3.40 | 0.0 | 4.19 | NaN | 390757.0 |

3649 rows × 7 columns

For this dataset, we will have:

| Features | Factors | Response |

|---|---|---|

| Year | ||

| Electricity from fossil fuels (TWh) | X | |

| Electricity from nuclear (TWh) | X | |

| Electricity from renewables (TWh) | X | |

| Land Area (Km2) | X | |

| Value_co2_emissions_kt_by_country | X |

Remember also that according to the dataset description, the Value_co2_emissions_kt_by_country has some missing values that we will have to deal with (drop rows or impute values).

Selection for the Primary energy consumption per capita

pe_cols_to_keep = [column for column in corr_matrix.columns if abs(corr_matrix.loc['Primary energy consumption per capita (kWh/person)', column]) > 0.4]

pe_cols_to_keep

['Access to electricity (% of population)',

'Access to clean fuels for cooking',

'Renewable energy share in the total final energy consumption (%)',

'Primary energy consumption per capita (kWh/person)',

'gdp_per_capita']

df_pe = df[['Entity', 'Year'] + pe_cols_to_keep]

df_pe.to_csv("../data/pe/pe_dataset.csv", index=False)

df_pe

| Entity | Year | Access to electricity (% of population) | Access to clean fuels for cooking | Renewable energy share in the total final energy consumption (%) | Primary energy consumption per capita (kWh/person) | gdp_per_capita | |

|---|---|---|---|---|---|---|---|

| 0 | Afghanistan | 2000 | 1.613591 | 6.2 | 44.99 | 302.59482 | NaN |

| 1 | Afghanistan | 2001 | 4.074574 | 7.2 | 45.60 | 236.89185 | NaN |

| 2 | Afghanistan | 2002 | 9.409158 | 8.2 | 37.83 | 210.86215 | 179.426579 |

| 3 | Afghanistan | 2003 | 14.738506 | 9.5 | 36.66 | 229.96822 | 190.683814 |

| 4 | Afghanistan | 2004 | 20.064968 | 10.9 | 44.24 | 204.23125 | 211.382074 |

| ... | ... | ... | ... | ... | ... | ... | ... |

| 3644 | Zimbabwe | 2016 | 42.561730 | 29.8 | 81.90 | 3227.68020 | 1464.588957 |

| 3645 | Zimbabwe | 2017 | 44.178635 | 29.8 | 82.46 | 3068.01150 | 1235.189032 |

| 3646 | Zimbabwe | 2018 | 45.572647 | 29.9 | 80.23 | 3441.98580 | 1254.642265 |

| 3647 | Zimbabwe | 2019 | 46.781475 | 30.1 | 81.50 | 3003.65530 | 1316.740657 |

| 3648 | Zimbabwe | 2020 | 52.747670 | 30.4 | 81.90 | 2680.13180 | 1214.509820 |

3649 rows × 7 columns

For this dataset, we will have:

| Features | Factors | Response |

|---|---|---|

| Year | ||

| Access to electricity (% of population) | X | |

| Access to clean fuels for cooking | X | |

| Renewable energy share in the total final energy consumption (%) | X | |

| Primary energy consumption per capita (kWh/person) | X | |

| GDP per capita | X |

3. Higher dimension relationship

CO2 emissions prediction

# This prints the number of rows with at least one missing value

df_co2.shape[0] - df_co2.dropna().shape[0]

548

This dataset does not contain a lot of missing values. If we drop rows that are containing we still have more than 3000 samples to train our model. As we have 5 features, this number if samples is sufficient to train a regression model. There is no need of finding higher dimensional relationship.

Renewable energy consumption prediction

df_pe.shape[0] - df_pe.dropna().shape[0]

622

Same result are the CO2 emission dataset: even though we drop all the rows contaning missing values, we still have around 3000 samples to train our model. This is sufficient for a regression model with 5 features.

Feature Sampling

To have a better distribution, we’ll normalize our data.

def normalizeDataFrame(df):

return (df - df.min()) / (df.max() - df.min())

def saveDataset(df_to_save, original_df, path):

df_to_save.insert(0, "Entity", original_df["Entity"])

df_to_save.insert(1, "Year", original_df["Year"])

df_to_save.to_csv(path, index=False)

CO2 emissions prediction

normalized_df_co2 = normalizeDataFrame(df_co2.iloc[:, 2:])

normalized_df_co2_to_save = normalized_df_co2.copy()

saveDataset(normalized_df_co2_to_save, df_co2, "../data/co2/normalized_co2_dataset.csv")

normalized_df_co2

| Electricity from fossil fuels (TWh) | Electricity from nuclear (TWh) | Electricity from renewables (TWh) | Value_co2_emissions_kt_by_country | Land Area(Km2) | |

|---|---|---|---|---|---|

| 0 | 0.000031 | 0.0 | 0.000142 | 0.000070 | 0.065321 |

| 1 | 0.000017 | 0.0 | 0.000229 | 0.000067 | 0.065321 |

| 2 | 0.000025 | 0.0 | 0.000256 | 0.000095 | 0.065321 |

| 3 | 0.000060 | 0.0 | 0.000288 | 0.000113 | 0.065321 |

| 4 | 0.000064 | 0.0 | 0.000256 | 0.000095 | 0.065321 |

| ... | ... | ... | ... | ... | ... |

| 3644 | 0.000675 | 0.0 | 0.001519 | 0.001028 | 0.039134 |

| 3645 | 0.000588 | 0.0 | 0.001968 | 0.000965 | 0.039134 |

| 3646 | 0.000720 | 0.0 | 0.002499 | 0.001155 | 0.039134 |

| 3647 | 0.000706 | 0.0 | 0.002096 | 0.001097 | 0.039134 |

| 3648 | 0.000656 | 0.0 | 0.001918 | NaN | 0.039134 |

3649 rows × 5 columns

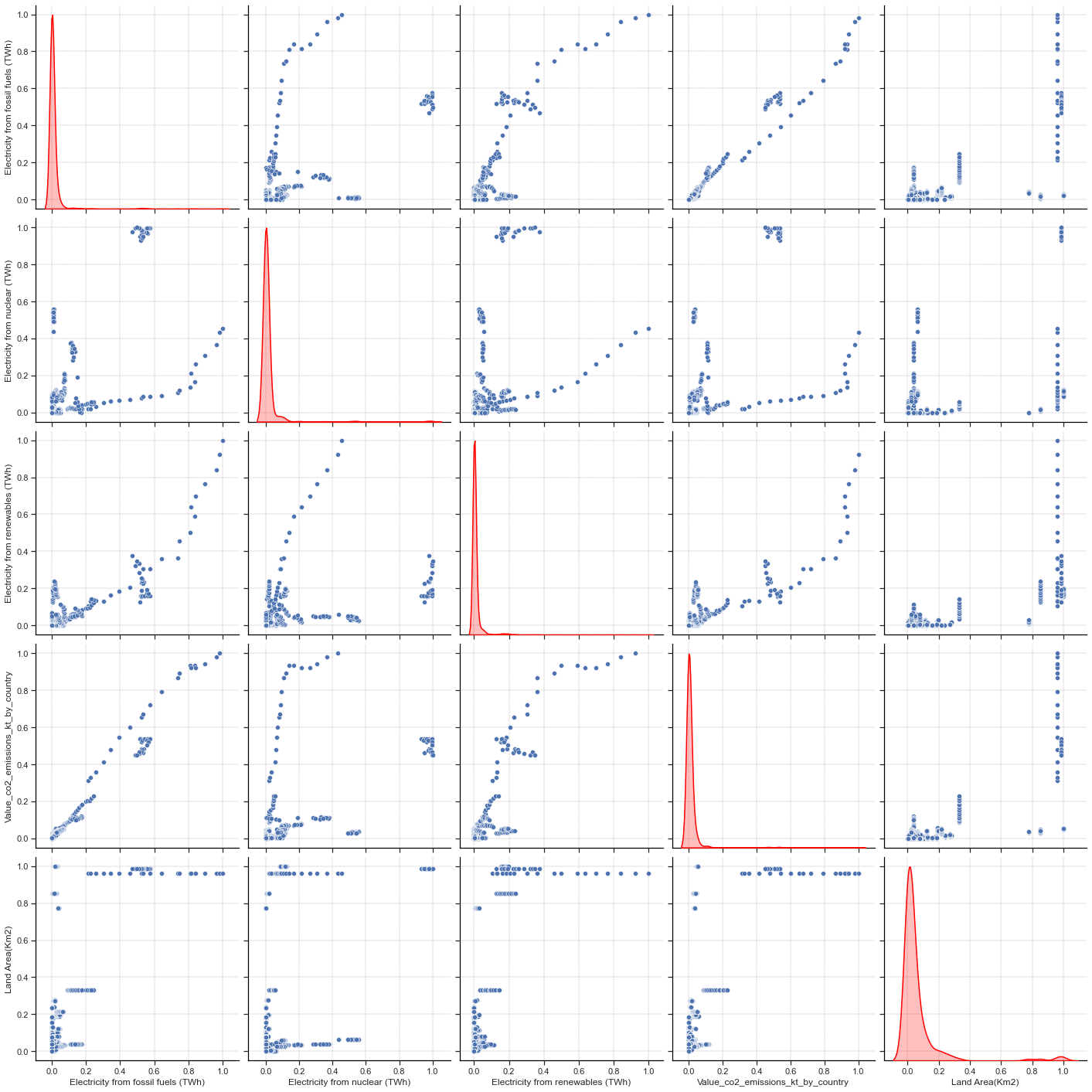

sns.pairplot(normalized_df_co2, height=4, kind="scatter", diag_kind="kde", diag_kws={"linewidth": 1.5, "color": "red"})

We can clearly see correlations on scatter plot between features. For feature distribution, is hard to see because countries that are emitting a lot of CO2 are so few compared to those who are not. Computing logarithmic values of the dataset could be a possibility but many samples have 0 as value for the Electricity from nuclear (TWh) feature. As log(0) is not defined, we can’t use this method. Let’s try to remove some outliers.

top_outliers_co2 = df_co2['Value_co2_emissions_kt_by_country'].quantile(0.95)

normalized_df_co2_2 = normalizeDataFrame(df_co2[df_co2['Value_co2_emissions_kt_by_country'] < top_outliers_co2].iloc[:, 2:])

normalized_df_co2_2_to_save = normalized_df_co2_2.copy()

saveDataset(normalized_df_co2_2_to_save, df_co2[df_co2['Value_co2_emissions_kt_by_country'] < top_outliers_co2], "../data/co2/normalized_co2_dataset_without_outliers.csv")

print(normalized_df_co2_2.shape)

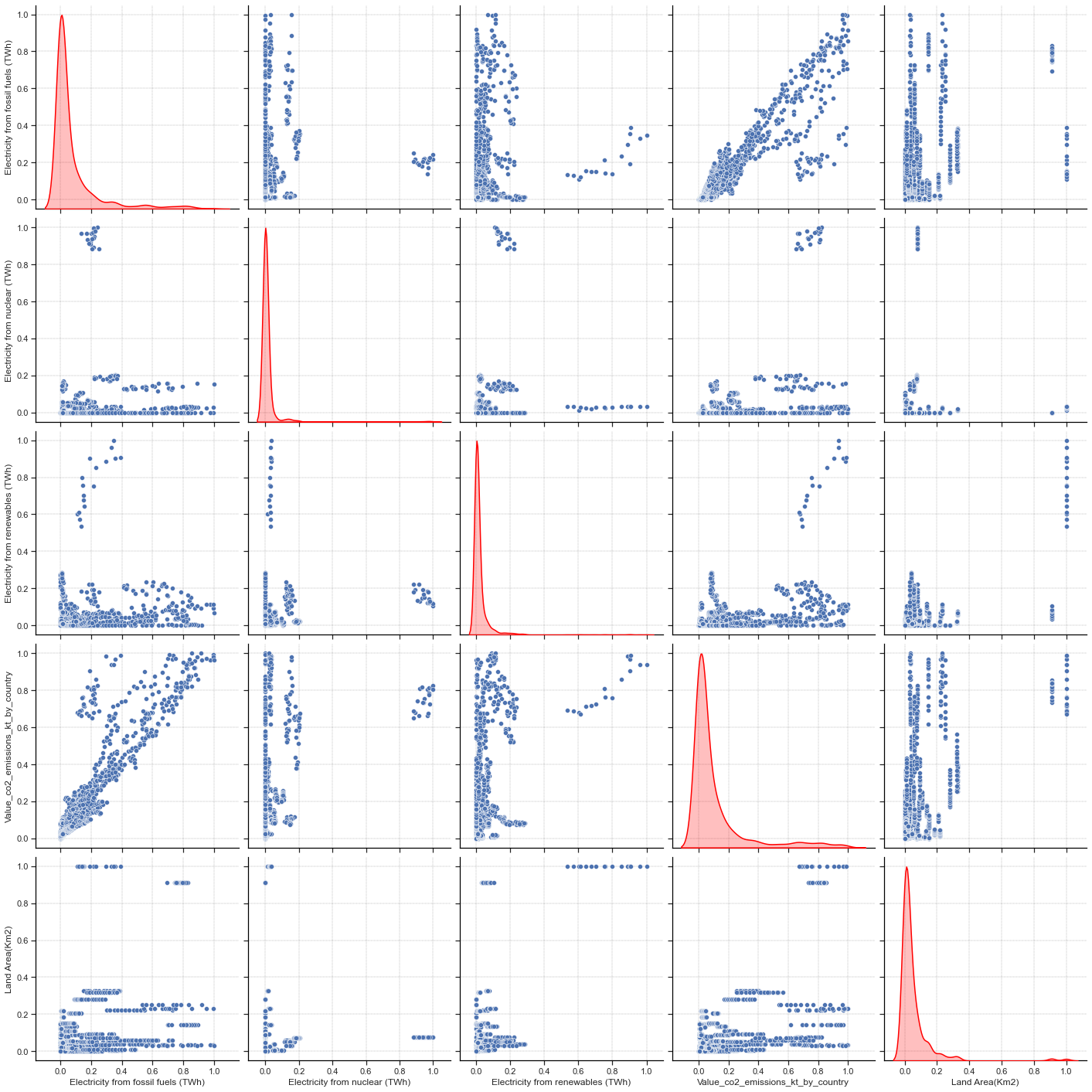

sns.pairplot(normalized_df_co2_2, height=4, kind="scatter", diag_kind="kde", diag_kws={"linewidth": 1.5, "color": "red"})

plt.savefig("../img/pairplot_co2.png", dpi=200, bbox_inches="tight")

(3059, 5)

This is better ! We still have enough samples to train our model and it is a bit easier to see that our data are quite randomly sampled. We have continuous samples and it might seems that the response feature is following a Weibull or Log-normal distribution.

There is also sufficient variation across all features to support statistical modeling.

Primary energy consumption per capita

normalized_df_pe = normalizeDataFrame(df_pe.iloc[:, 2:])

normalized_df_pe_to_save = normalized_df_pe.copy()

saveDataset(normalized_df_pe_to_save, df_pe, "../data/pe/normalized_pe_dataset.csv")

normalized_df_pe

| Access to electricity (% of population) | Access to clean fuels for cooking | Renewable energy share in the total final energy consumption (%) | Primary energy consumption per capita (kWh/person) | gdp_per_capita | |

|---|---|---|---|---|---|

| 0 | 0.003659 | 0.062 | 0.468451 | 0.001152 | NaN |

| 1 | 0.028581 | 0.072 | 0.474802 | 0.000902 | NaN |

| 2 | 0.082603 | 0.082 | 0.393898 | 0.000803 | 0.000547 |

| 3 | 0.136573 | 0.095 | 0.381716 | 0.000876 | 0.000638 |

| 4 | 0.190513 | 0.109 | 0.460641 | 0.000778 | 0.000806 |

| ... | ... | ... | ... | ... | ... |

| 3644 | 0.418333 | 0.298 | 0.852770 | 0.012292 | 0.010961 |

| 3645 | 0.434707 | 0.298 | 0.858601 | 0.011684 | 0.009102 |

| 3646 | 0.448824 | 0.299 | 0.835381 | 0.013108 | 0.009260 |

| 3647 | 0.461066 | 0.301 | 0.848605 | 0.011439 | 0.009763 |

| 3648 | 0.521484 | 0.304 | 0.852770 | 0.010207 | 0.008935 |

3649 rows × 5 columns

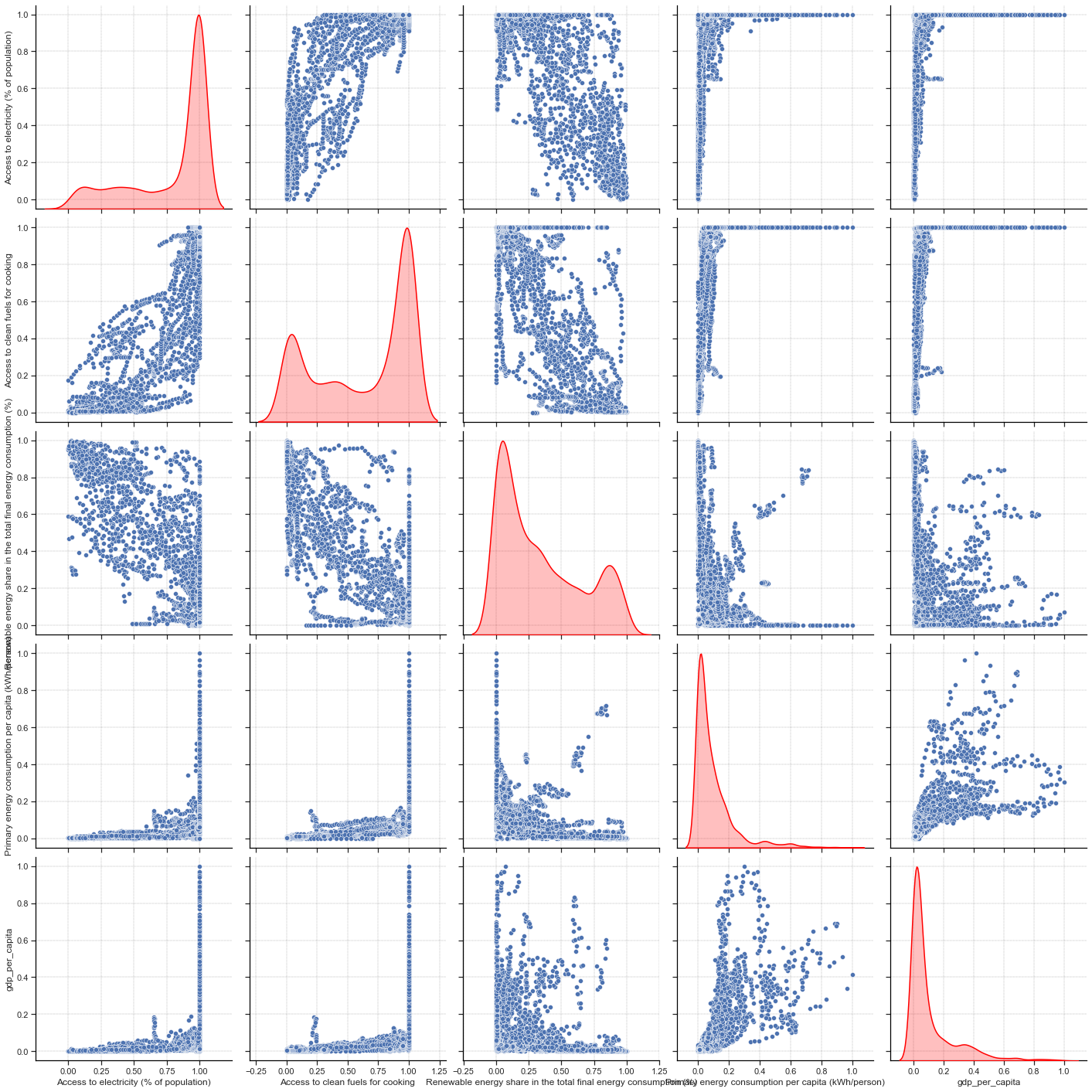

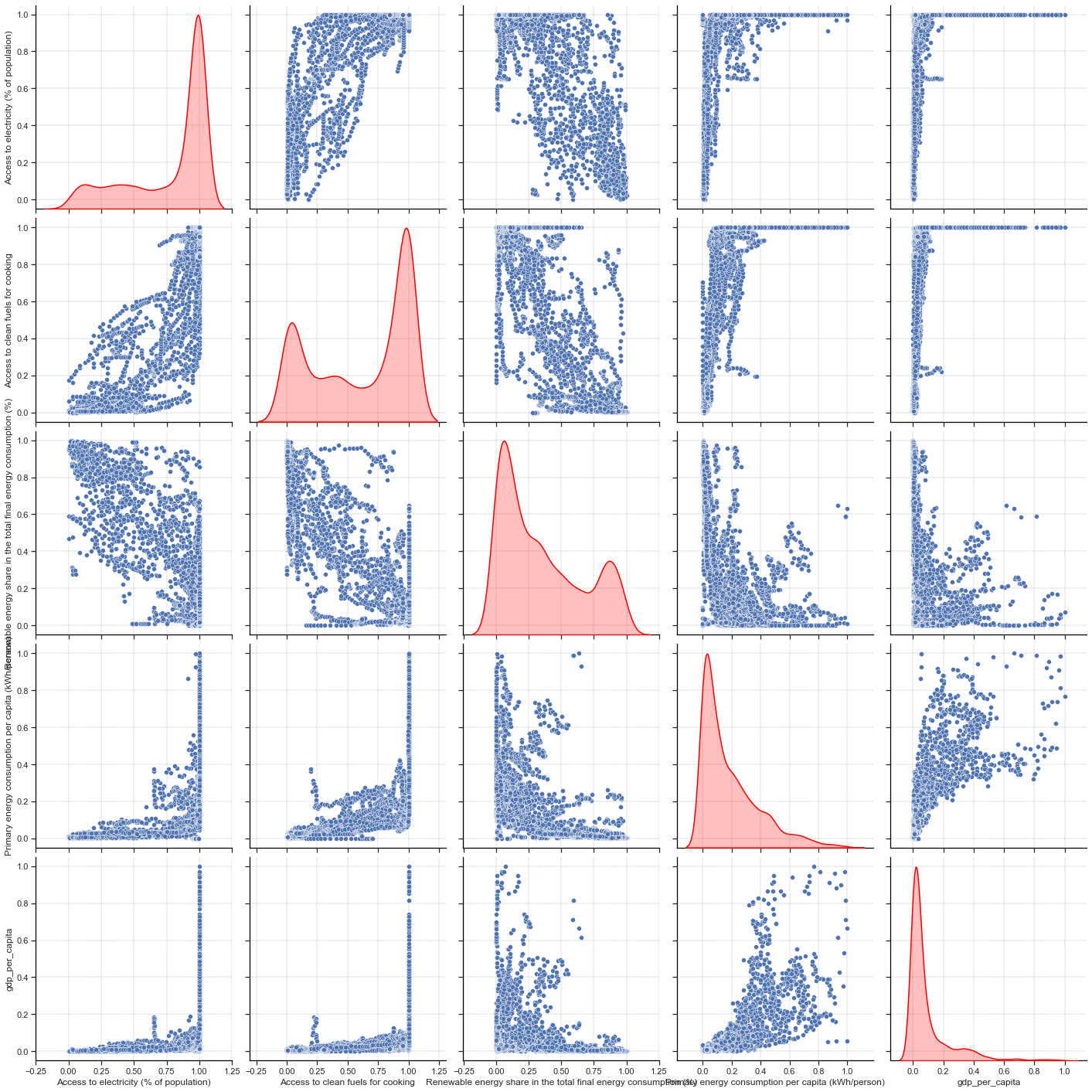

sns.pairplot(normalized_df_pe, height=4, kind="scatter", diag_kind="kde", diag_kws={"linewidth": 1.5, "color": "red"})

For this problem, it is easier to see that all the input features are well distributed: our data are more randomly sampled than for the CO2 problem. However, it is a bit more difficult to see that for the response feature. We can try to remove some outliers to have a better distribution.

top_outliers_pe = df_pe['Primary energy consumption per capita (kWh/person)'].quantile(0.95)

normalized_df_pe_2 = normalizeDataFrame(df_pe[df_pe['Primary energy consumption per capita (kWh/person)'] < top_outliers_pe].iloc[:, 2:])

normalized_df_pe_2_to_save = normalized_df_pe_2.copy()

saveDataset(normalized_df_pe_2_to_save, df_pe[df_pe['Primary energy consumption per capita (kWh/person)'] < top_outliers_pe], "../data/pe/normalized_pe_dataset_without_outliers.csv")

print(normalized_df_pe_2.shape)

sns.pairplot(normalized_df_pe_2, height=4, kind="scatter", diag_kind="kde", diag_kws={"linewidth": 1.5, "color": "red"})

plt.savefig("../img/pairplot_pe.png", dpi=200, bbox_inches="tight")

(3466, 5)

Only by removing 5% of the outliers, we can see that the distribution of the continuous response feature is better ! Moreover, the shape of this distribution looks like a Log-normal distribution.

There is also more variation across all these features to support statistical modeling than for the CO2 emissions prediction problem, which is good.